ACID, @Transactional, Transaction isolation levels, L1 and L2 Cache, Redis

ACID:

Atomicity: Atomicity ensures that a transaction is treated as a single unit of work, which either completes entirely or fails entirely. There is no partial completion. For example, consider a bank transfer where money is withdrawn from one account and deposited into another. If the deposit fails, the withdrawal should be rolled back to maintain the atomicity of the transaction.

Consistency: Consistency ensures that a transaction transforms the database from one consistent state to another consistent state. It guarantees that the integrity constraints, such as foreign key relationships or unique constraints, are not violated. For example, if the schema forbids negative balances (a CHECK constraint), a transfer that would overdraw an account is rejected instead of being committed.

Isolation: Isolation ensures that the concurrent execution of transactions produces a result that is equivalent to some serial execution of the transactions. This means that transactions are isolated from each other until they are completed. For example, if two transactions are executed concurrently, the result should be the same as if they were executed sequentially.

Durability: Durability ensures that once a transaction is committed, its changes are permanently saved in the database, even in the event of a system failure. For example, if a transaction updates a customer's address, the new address should persist even if the system crashes after the transaction is committed.

A - Atomicity: All or Nothing. Atomicity is also known as the ‘All or nothing rule’.

A - Atomicity: two or more operations are either all executed or none of them is. Successful operations are committed, but if any operation fails, a rollback happens.

All queries must succeed. If one fails all should roll back.

An atomic transaction is a transaction that rolls back all of its queries if any one of them fails.

Atomicity takes individual operations and turns them into an all-or-nothing unit of work, succeeding if and only if all contained operations succeed.

A transaction might encapsulate a state change (unless it is a read-only one). A transaction must always leave the system in a consistent state, no matter how many concurrent transactions are interleaved at any given time.

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.sql.Statement;

public class AtomicityExample {

    public static void main(String[] args) throws SQLException {
        String url = "jdbc:mysql://localhost:3306/bank";
        String username = "your_username";
        String password = "your_password";

        // The connection is declared here so it is still in scope in the catch block below
        try (Connection connection = DriverManager.getConnection(url, username, password)) {
            connection.setAutoCommit(false); // start transaction
            try (Statement statement = connection.createStatement()) {
                // Simulate a money transfer of 100 from account 1 to account 2
                // Deduct amount from account 1
                statement.executeUpdate("UPDATE accounts SET balance = balance - 100 WHERE id = 1");

                // Simulate a failure (e.g., an application error) before crediting account 2
                if (true) {
                    throw new RuntimeException("Simulated failure");
                }

                // Add amount to account 2 (never reached in this demo)
                statement.executeUpdate("UPDATE accounts SET balance = balance + 100 WHERE id = 2");

                connection.commit(); // both updates become permanent together
                System.out.println("Transaction committed successfully.");
            } catch (SQLException | RuntimeException e) {
                // Undo ALL statements of the transaction, including the debit above
                connection.rollback();
                System.out.println("Transaction rolled back: " + e.getMessage());
            }
        }
    }
}

C - Consistency: if any error occurs, the system returns to the state it was in before the failed transaction. If the transaction completes successfully, the system verifies that everything in it succeeded and no rule was violated.

Consistency means that constraints are enforced for every committed transaction. That implies that all keys, data types, checks and triggers succeed and no constraint violation is triggered.

Consistency in the context of database transactions refers to the requirement that a transaction must transform the database from one consistent state to another consistent state. This means that the database should abide by all the constraints, rules, and relationships defined in the database schema before and after the transaction.

Consistency is closely related to the concept of maintaining data integrity. Data integrity ensures that the data stored in a database is accurate, valid, and reliable. Consistency guarantees that the database remains in a valid state at all times, even when multiple transactions are executed concurrently.

To ensure consistency, databases often use constraints such as foreign key constraints, unique constraints, and check constraints. These constraints enforce rules that prevent invalid or inconsistent data from being inserted or updated in the database.

For example, consider a database table that stores information about students and their courses. If a student enrolls in a course, the database must ensure that both the student and the course exist in the database before adding the enrollment record. This ensures consistency by maintaining the integrity of the relationships between students and courses.
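A minimal in-memory sketch of the same rule, assuming hypothetical students, courses and enrollments collections (all names are made up for illustration): enroll() plays the role of two foreign-key checks and rejects the write before any state changes, so the data never reaches an inconsistent state. A real database does this declaratively with FOREIGN KEY constraints.

```java
import java.util.HashSet;
import java.util.Set;

public class EnrollmentConsistency {

    // Hypothetical "tables", modeled as sets of ids
    private final Set<Integer> students = new HashSet<>();
    private final Set<Integer> courses = new HashSet<>();
    // Each enrollment is stored as a "studentId:courseId" pair
    private final Set<String> enrollments = new HashSet<>();

    void addStudent(int id) { students.add(id); }

    void addCourse(int id) { courses.add(id); }

    // Acts like two foreign-key checks: an invalid write is rejected before anything changes
    void enroll(int studentId, int courseId) {
        if (!students.contains(studentId)) {
            throw new IllegalArgumentException("Unknown student " + studentId);
        }
        if (!courses.contains(courseId)) {
            throw new IllegalArgumentException("Unknown course " + courseId);
        }
        enrollments.add(studentId + ":" + courseId);
    }

    int enrollmentCount() { return enrollments.size(); }

    public static void main(String[] args) {
        EnrollmentConsistency db = new EnrollmentConsistency();
        db.addStudent(1);
        db.addCourse(10);
        db.enroll(1, 10); // valid: both referenced rows exist
        try {
            db.enroll(2, 10); // invalid: student 2 does not exist
        } catch (IllegalArgumentException e) {
            System.out.println("Rejected: " + e.getMessage());
        }
        System.out.println("Enrollments: " + db.enrollmentCount());
    }
}
```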

In summary, consistency in database transactions ensures that the database remains in a valid and reliable state, adhering to all constraints and rules defined in the database schema.

I - Isolation: while a transaction is running, all objects taking part in it must be synchronized, so that concurrent transactions do not interfere with each other.

Can my in-flight transaction see changes made by other transactions? The answer depends on:

- Read phenomena

- Isolation levels

Transactions require concurrency control mechanisms, and they guarantee correctness even when being interleaved. Isolation brings us the benefit of hiding uncommitted state changes from the outside world, as failing transactions shouldn’t ever corrupt the state of the system. Isolation is achieved through concurrency control using pessimistic or optimistic locking mechanisms.

Isolation in the context of database transactions refers to the property that ensures that the concurrent execution of transactions produces a result that is equivalent to some serial execution of the transactions. In other words, transactions are executed as if they were the only transactions running on the database, even when multiple transactions are executing concurrently.

Isolation is important because it prevents transactions from interfering with each other, which could lead to data inconsistency or incorrect results. The isolation level of a transaction determines how it interacts with other transactions running concurrently.

There are different isolation levels defined by the SQL standard, such as Read Uncommitted, Read Committed, Repeatable Read, and Serializable, each offering a different level of isolation and trade-offs in terms of performance and consistency.

To illustrate the concept of isolation, let's consider an example involving two transactions:

- Transaction A transfers $100 from account A to account B.

- Transaction B checks the balance of account A.

Depending on the isolation level, different outcomes can occur:

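- If both transactions run at the Read Committed isolation level, transaction B sees the old balance while transaction A is still in progress, and the updated balance once transaction A commits.

- If transaction B runs at the Repeatable Read isolation level (as implemented by snapshot-based engines such as MySQL InnoDB or PostgreSQL), it keeps seeing the balance from the snapshot taken at its first query: if that query ran before transaction A committed, B does not see A's change even after A commits.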

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

public class IsolationExample {

    public static void main(String[] args) throws SQLException {
        String url = "jdbc:mysql://localhost:3306/bank";
        String username = "your_username";
        String password = "your_password";

        // Connections are declared here so they are still in scope in the catch block below
        try (Connection connection1 = DriverManager.getConnection(url, username, password);
             Connection connection2 = DriverManager.getConnection(url, username, password)) {
            // Set the level explicitly (before the transaction starts) instead of relying on the DB default.
            // Try TRANSACTION_READ_COMMITTED vs TRANSACTION_REPEATABLE_READ and compare the output.
            connection2.setTransactionIsolation(Connection.TRANSACTION_REPEATABLE_READ);
            connection1.setAutoCommit(false); // start transaction 1
            connection2.setAutoCommit(false); // start transaction 2

            try (Statement statement1 = connection1.createStatement();
                 Statement statement2 = connection2.createStatement()) {
                // Transaction 1: transfer $100 from account A (id = 1) to account B (id = 2)
                statement1.executeUpdate("UPDATE accounts SET balance = balance - 100 WHERE id = 1");
                statement1.executeUpdate("UPDATE accounts SET balance = balance + 100 WHERE id = 2");

                // Transaction 2: read the balance of account A before and after transaction 1 commits
                int balanceBefore = getBalance(statement2, 1);
                connection1.commit(); // commit transaction 1
                int balanceAfter = getBalance(statement2, 1);

                System.out.println("Balance of account A before transaction 1 commits: $" + balanceBefore);
                System.out.println("Balance of account A after transaction 1 commits: $" + balanceAfter);

                connection2.commit(); // commit transaction 2
                System.out.println("Transactions committed successfully.");
            } catch (SQLException e) {
                e.printStackTrace();
                connection1.rollback(); // roll back transaction 1
                connection2.rollback(); // roll back transaction 2
                System.out.println("Transactions rolled back.");
            }
        }
    }

    private static int getBalance(Statement statement, int accountId) throws SQLException {
        // try-with-resources closes the ResultSet even if reading fails
        try (ResultSet resultSet = statement.executeQuery(
                "SELECT balance FROM accounts WHERE id = " + accountId)) {
            return resultSet.next() ? resultSet.getInt("balance") : 0;
        }
    }
}

D - Durability: all changes made by a committed transaction must be stored in the DB. This, in turn, makes it possible to recover the system after a failure.

A successful transaction must permanently change the state of a system, and before it ends, the state changes are recorded in a persisted transaction log. If the system suddenly crashes or loses power, committed transactions whose changes had not yet been written to the data files can be replayed from that log.

For messaging systems like JMS, transactions are not mandatory. That’s why we have non-transacted acknowledgement modes.

File system operations are usually non-managed, but if your business requirements demand transactional file operations, you might use a tool such as XADisk.

While messaging and file systems use transactions optionally, for database management systems transactions are compulsory.

Durability in the context of database transactions refers to the property that ensures once a transaction is committed, its effects are permanent and will not be lost, even in the event of a system failure. Durability guarantees that the changes made by a committed transaction are recorded in non-volatile storage, such as a disk, and can be recovered in the event of a crash or restart.

To ensure durability, databases use techniques like write-ahead logging (WAL) and transaction logging. These techniques ensure that before a transaction is considered committed, its changes are safely stored in a log file on disk. In case of a failure, the database can use the log file to recover the committed transactions and bring the database back to a consistent state.
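To make the write-ahead idea concrete, here is a toy, redo-only sketch (the class, the "account=balance" log format and the method names are hypothetical; a real WAL also handles undo information, checkpoints and torn writes): a commit appends a record to a log file and forces it to disk with FileChannel.force(true) before touching the in-memory data, and recovery simply replays the log.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class WriteAheadLogSketch {

    private final Path logFile;
    // In-memory "data pages": lost on a crash, rebuilt from the log
    private final Map<String, Integer> balances = new HashMap<>();

    WriteAheadLogSketch(Path logFile) throws IOException {
        this.logFile = logFile;
        recover(); // on startup, rebuild state from the log
    }

    // Commit = append the log record and force it to disk BEFORE applying the change
    void commit(String account, int newBalance) throws IOException {
        ByteBuffer record = ByteBuffer.wrap(
                (account + "=" + newBalance + "\n").getBytes(StandardCharsets.UTF_8));
        try (FileChannel channel = FileChannel.open(logFile, StandardOpenOption.CREATE,
                StandardOpenOption.WRITE, StandardOpenOption.APPEND)) {
            while (record.hasRemaining()) {
                channel.write(record);
            }
            channel.force(true); // fsync: once this returns, the commit survives a crash
        }
        balances.put(account, newBalance); // only now update the in-memory data
    }

    // Recovery = replay every committed record in log order
    private void recover() throws IOException {
        if (!Files.exists(logFile)) {
            return;
        }
        List<String> lines = Files.readAllLines(logFile, StandardCharsets.UTF_8);
        for (String line : lines) {
            String[] parts = line.split("=");
            balances.put(parts[0], Integer.parseInt(parts[1]));
        }
    }

    Integer balance(String account) { return balances.get(account); }

    public static void main(String[] args) throws IOException {
        Path log = Files.createTempFile("wal", ".log");
        WriteAheadLogSketch db = new WriteAheadLogSketch(log);
        db.commit("account1", 1100);
        db.commit("account2", 400);
        // Simulate a crash: discard the in-memory instance and "restart" from the log alone
        WriteAheadLogSketch restarted = new WriteAheadLogSketch(log);
        System.out.println("account1 after restart: " + restarted.balance("account1"));
        Files.delete(log);
    }
}
```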

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

public class DurabilityExample {

    static final String URL = "jdbc:mysql://localhost:3306/bank";
    static final String USERNAME = "your_username";
    static final String PASSWORD = "your_password";

    public static void main(String[] args) throws SQLException {
        try (Connection connection = DriverManager.getConnection(URL, USERNAME, PASSWORD);
             Statement statement = connection.createStatement()) {
            connection.setAutoCommit(false); // start transaction
            // Update the balance of account 1
            statement.executeUpdate("UPDATE accounts SET balance = balance + 100 WHERE id = 1");
            // Commit the transaction: once commit() returns, the change is durable
            connection.commit();

            // Simulate a failure (e.g., an application crash) AFTER committing the transaction
            if (true) {
                throw new RuntimeException("Simulated system failure");
            }
        } catch (RuntimeException e) {
            // A rollback here would be pointless: the committed transaction is already permanent
            System.out.println("Failure after commit: " + e.getMessage());
        }

        // Reconnect (as after a restart) and check that the committed update survived
        try (Connection connection = DriverManager.getConnection(URL, USERNAME, PASSWORD);
             Statement statement = connection.createStatement();
             ResultSet resultSet = statement.executeQuery("SELECT balance FROM accounts WHERE id = 1")) {
            if (resultSet.next()) {
                System.out.println("Balance of account 1 after restart: " + resultSet.getInt("balance"));
            }
        }
    }
}

Isolation levels:

Query to check the current isolation level (MySQL): SELECT @@transaction_isolation;
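The same check (and change) can be done from Java through JDBC. A small sketch, reusing the placeholder connection settings of the examples above; the IsolationLevelInfo class and its name() helper are hypothetical, while getTransactionIsolation(), setTransactionIsolation() and the TRANSACTION_* constants are standard java.sql.Connection members.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

public class IsolationLevelInfo {

    // Maps the java.sql.Connection constants to readable names
    static String name(int level) {
        return switch (level) {
            case Connection.TRANSACTION_NONE -> "NONE";
            case Connection.TRANSACTION_READ_UNCOMMITTED -> "READ_UNCOMMITTED";
            case Connection.TRANSACTION_READ_COMMITTED -> "READ_COMMITTED";
            case Connection.TRANSACTION_REPEATABLE_READ -> "REPEATABLE_READ";
            case Connection.TRANSACTION_SERIALIZABLE -> "SERIALIZABLE";
            default -> "UNKNOWN(" + level + ")";
        };
    }

    public static void main(String[] args) throws SQLException {
        // Placeholder settings, as in the other examples
        try (Connection connection = DriverManager.getConnection(
                "jdbc:mysql://localhost:3306/bank", "your_username", "your_password")) {
            System.out.println("Current level: " + name(connection.getTransactionIsolation()));
            // Changes the level for this connection only; do it before starting a transaction
            connection.setTransactionIsolation(Connection.TRANSACTION_READ_COMMITTED);
            System.out.println("New level: " + name(connection.getTransactionIsolation()));
        }
    }
}
```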

As we know, in order to maintain consistency in a database, it follows ACID properties. Among these four properties (Atomicity, Consistency, Isolation, and Durability) Isolation determines how transaction integrity is visible to other users and systems. It means that a transaction should take place in a system in such a way that it is the only transaction that is accessing the resources in a database system.

Isolation levels define the degree to which a transaction must be isolated from the data modifications made by any other transaction in the database system. A transaction isolation level is defined by the following phenomena:

- Dirty Read – A dirty read is a situation when a transaction reads data that has not yet been committed. For example, let’s say transaction T1 updates a row and leaves it uncommitted; meanwhile, transaction T2 reads the updated row. If T1 rolls back the change, T2 will have read data that is considered never to have existed.

- Non-repeatable Read – A non-repeatable read occurs when a transaction reads the same row twice and gets a different value each time. For example, suppose transaction T1 reads data. Due to concurrency, another transaction T2 updates the same data and commits. Now, if T1 re-reads the same data, it will retrieve a different value.

- Phantom Read – A phantom read occurs when the same query is executed twice, but the two executions return different sets of rows. For example, suppose transaction T1 retrieves a set of rows that satisfy some search criteria. Now, transaction T2 inserts new rows that match T1's search criteria. If T1 re-executes the statement that reads the rows, it gets a different set of rows this time.

Based on these phenomena, the SQL standard defines four isolation levels:

- Read Uncommitted – Read Uncommitted is the lowest isolation level. In this level, one transaction may read not yet committed changes made by other transactions, thereby allowing dirty reads. At this level, transactions are not isolated from each other.

- Read Committed – This isolation level guarantees that any data read is committed at the moment it is read, so it does not allow dirty reads. In a lock-based implementation, the transaction holds write locks until it commits but releases read locks as soon as each read finishes, which is why another transaction can change a row between two reads. MVCC databases instead return the latest committed version of the row.

- Repeatable Read – This level is more restrictive than Read Committed. The transaction holds read locks on all rows it references and write locks on the rows it updates or deletes. Since other transactions cannot update or delete these rows until it ends, non-repeatable reads are avoided; newly inserted rows (phantoms) can still appear.

- Serializable – This is the highest isolation level. It guarantees that concurrently executing transactions produce the same result as some serial (one-after-another) execution of them.

In Spring's Isolation enum (used with @Transactional), the four standard levels are named:

- READ_UNCOMMITTED

- READ_COMMITTED

- REPEATABLE_READ (many MVCC databases, e.g. PostgreSQL, implement it as snapshot isolation)

- SERIALIZABLE

All but the SERIALIZABLE level are subject to data anomalies (phenomena) that might occur according to the following pattern:

| ISOLATION LEVEL | DIRTY READ | NON-REPEATABLE READ | PHANTOM READ |
|---|---|---|---|
| READ_UNCOMMITTED | allowed | allowed | allowed |
| READ_COMMITTED | prevented | allowed | allowed |
| REPEATABLE_READ | prevented | prevented | allowed |
| SERIALIZABLE | prevented | prevented | prevented |

1. Read uncommitted:

Dirty read

A dirty read happens when a transaction is allowed to read uncommitted changes of some other running transaction. This happens because there is no locking preventing it. In the picture above, you can see that the second transaction uses an inconsistent value because the first transaction has rolled back.

Reading uncommitted data

As previously mentioned, all database changes are applied to the actual data structures (memory buffers, data blocks, indexes). A dirty read happens when a transaction is allowed to read the uncommitted changes of some other concurrent transaction.

Taking a business decision on a value that has not been committed is risky because uncommitted changes might get rolled back.

In the diagram above, the flow of statements goes like this:

- Alice and Bob start two database transactions.

- Alice modifies the title of a given post record.

- Bob reads the uncommitted post record.

- If Alice commits her transaction, everything is fine. But if Alice rolls back, then Bob will see a record version that no longer exists in the database transaction log.

This anomaly is only permitted by the Read Uncommitted isolation level, and, because of the impact on data integrity, most database systems offer a higher default isolation level.

How the database prevents it

To prevent dirty reads, the database engine must hide uncommitted changes from all other concurrent transactions. Each transaction is allowed to see its own changes because otherwise the read-your-own-writes consistency guarantee is compromised.

If the underlying database uses 2PL (Two-Phase Locking), the uncommitted rows are protected by write locks which prevent other concurrent transactions from reading these records until they are committed.

When the underlying database uses MVCC (Multi-Version Concurrency Control), the database engine can use the undo log which already captures the previous version of every uncommitted record, to restore the previous value in other concurrent transaction queries. Because this mechanism is used by all other isolation levels (Read Committed, Repeatable Read, Serializable), most database systems optimize the before image restoring process (lowering its overhead on the overall application performance).
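A toy model of the MVCC approach described above, assuming a single row and a single writer (all names are hypothetical; real engines keep per-row version chains): writes change the row in place, the before image is kept in an undo entry, a Read Committed reader from another transaction is served that before image, and a Read Uncommitted reader sees the dirty in-place value.

```java
public class DirtyReadSketch {

    enum Level { READ_UNCOMMITTED, READ_COMMITTED }

    // A single hypothetical "post.title" value; writes are applied to it in place
    private String current = "Transactions";
    // Undo log entry: the before image, kept while a change is uncommitted
    private String undoImage;
    // Transaction holding the uncommitted change (acts as the row's write lock), or null
    private Integer writerTx;

    void write(int txId, String value) {
        if (writerTx != null && writerTx != txId) {
            throw new IllegalStateException("Row is locked by transaction " + writerTx);
        }
        if (writerTx == null) {
            undoImage = current; // remember the last committed value once
            writerTx = txId;
        }
        current = value;
    }

    String read(int txId, Level level) {
        boolean changedByOther = writerTx != null && writerTx != txId;
        if (changedByOther && level == Level.READ_COMMITTED) {
            return undoImage; // hide the uncommitted change: serve the before image
        }
        return current; // own writes, or READ_UNCOMMITTED: the in-place (possibly dirty) value
    }

    void commit(int txId) {
        if (writerTx != null && writerTx == txId) {
            writerTx = null;
            undoImage = null;
        }
    }

    void rollback(int txId) {
        if (writerTx != null && writerTx == txId) {
            current = undoImage; // restore the before image
            writerTx = null;
            undoImage = null;
        }
    }

    public static void main(String[] args) {
        DirtyReadSketch row = new DirtyReadSketch();
        int alice = 1, bob = 2;
        row.write(alice, "ACID");
        System.out.println("Bob (READ_UNCOMMITTED): " + row.read(bob, Level.READ_UNCOMMITTED)); // ACID
        System.out.println("Bob (READ_COMMITTED):   " + row.read(bob, Level.READ_COMMITTED));   // Transactions
        row.rollback(alice);
        System.out.println("After Alice's rollback: " + row.read(bob, Level.READ_UNCOMMITTED)); // Transactions
    }
}
```

Bob's Read Uncommitted read returns "ACID", a value that disappears after Alice's rollback, which is exactly the dirty read the text describes.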

2. Read committed:

Non-repeatable read

A non-repeatable (fuzzy) read happens when a transaction reads a row and a concurrent transaction modifies and commits that row before the first transaction ends, so re-reading the row returns a different value.

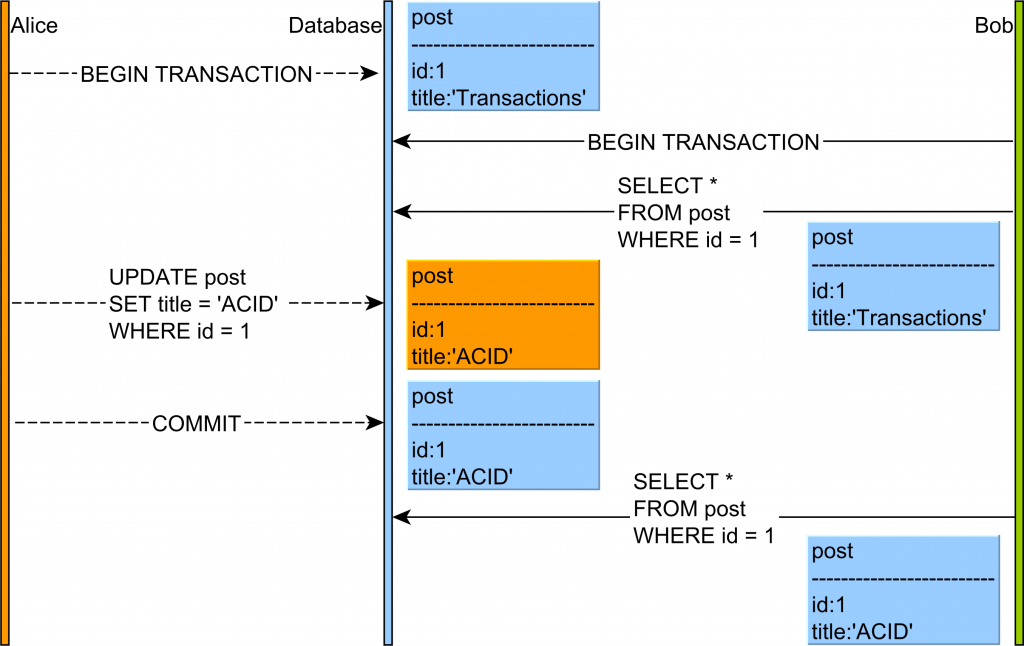

Observing data changed by a concurrent transaction

If one transaction reads a database row without applying a shared lock on the newly fetched record, then a concurrent transaction might change this row before the first transaction has ended.

In the diagram above, the flow of statements goes like this:

- Alice and Bob start two database transactions.

- Bob reads the post record, and the title column value is Transactions.

- Alice modifies the title of the given post record to the value ACID.

- Alice commits her database transaction.

- If Bob re-reads the post record, he will observe a different version of this table row.

This phenomenon is problematic when the current transaction makes a business decision based on the first value of the given database row (a client might order a product based on a stock quantity value that is no longer a positive integer).

How the database prevents it

If a database uses 2PL (Two-Phase Locking) and shared locks are taken on every read, this phenomenon is prevented, since no concurrent transaction would be allowed to acquire an exclusive lock on the same database record.

Most database systems have moved to an MVCC (Multi-Version Concurrency Control) model, and shared locks are no longer mandatory for preventing non-repeatable reads.

By verifying the current row version, a transaction can be aborted if a previously fetched record has changed in the meanwhile.

Repeatable Read and Serializable prevent this anomaly by default. With Read Committed, it is possible to avoid non-repeatable (fuzzy) reads if the shared locks are acquired explicitly (e.g. SELECT FOR SHARE).

Some ORM frameworks (e.g. JPA/Hibernate) offer application-level repeatable reads. The first snapshot of any retrieved entity is cached in the currently running Persistence Context.

Any successive query returning the same database row is going to use the very same object that was previously cached. This way, the fuzzy reads may be prevented even in Read Committed isolation level.
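A rough sketch of how a persistence context gives application-level repeatable reads. This is an identity-map analogy, not Hibernate's actual code; Post, find() and the loader function are hypothetical. The first lookup of an id loads and caches the entity, and later lookups return the very same object even if the row changed in the database in the meantime.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

public class PersistenceContextSketch {

    // Hypothetical entity
    static final class Post {
        final long id;
        String title;

        Post(long id, String title) {
            this.id = id;
            this.title = title;
        }
    }

    // First-level cache: entity id -> the managed instance (held by strong references)
    private final Map<Long, Post> managed = new HashMap<>();
    // Stand-in for "SELECT title FROM post WHERE id = ?"
    private final Function<Long, String> loadTitleFromDb;

    PersistenceContextSketch(Function<Long, String> loadTitleFromDb) {
        this.loadTitleFromDb = loadTitleFromDb;
    }

    // Like EntityManager.find: only the first lookup of an id goes to the database
    Post find(long id) {
        return managed.computeIfAbsent(id, key -> new Post(key, loadTitleFromDb.apply(key)));
    }

    public static void main(String[] args) {
        Map<Long, String> table = new HashMap<>(Map.of(1L, "Transactions"));
        PersistenceContextSketch context = new PersistenceContextSketch(table::get);

        Post first = context.find(1L);
        table.put(1L, "ACID"); // a concurrent transaction commits a new title
        Post second = context.find(1L);

        System.out.println(first == second); // true: the same cached object
        System.out.println(second.title);    // Transactions: the first snapshot is kept
    }
}
```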

Conclusion

This phenomenon is typical for both Read Uncommitted and Read Committed isolation levels. The problem is that Read Committed is the default isolation level for many RDBMS like Oracle, SQL Server or PostgreSQL, so this phenomenon can occur if nothing is done to prevent it.

Nevertheless, preventing this anomaly is fairly simple. All you need to do is use a higher isolation level like Repeatable Read (which is the default in MySQL) or Serializable. Or, you can simply lock the database record using a shared (read) lock, or an exclusive lock if the underlying database does not support shared locks (e.g. Oracle).

3. Repeatable read:

Phantom read

A phantom read happens when a subsequent transaction inserts a row that matches the filtering criteria of a previous query executed by a concurrent transaction. We, therefore, end up using stale data, which might affect our business operation. This is prevented using range locks or predicate locking.

Observing data changed by a concurrent transaction

If a transaction makes a business decision based on a set of rows satisfying a given predicate, without range locks, a concurrent transaction might insert a record matching that particular predicate.

In the diagram above, the flow of statements goes like this:

- Alice and Bob start two database transactions.

- Bob reads all the post_comment records associated with the post row with the identifier value of 1.

- Alice adds a new post_comment record which is associated with the post row having the identifier value of 1.

- Alice commits her database transaction.

- If Bob re-reads the post_comment records having the post_id column value equal to 1, he will observe a different version of this result set.

This phenomenon is problematic when the current transaction makes a business decision based on the first version of the given result set.

How the database prevents it

The SQL standard says that Phantom Read occurs if two consecutive query executions render different results because a concurrent transaction has modified the range of records in between the two calls.

Although providing consistent reads is a mandatory requirement for Serializability, that is not sufficient. For instance, one buyer might purchase a product without being aware of a better offer that was added right after the user has finished fetching the offer list.

The 2PL-based Serializable isolation prevents Phantom Reads through the use of predicate locking while MVCC (Multi-Version Concurrency Control) database engines address the Phantom Read anomaly by returning consistent snapshots.
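A toy sketch of how a consistent snapshot hides phantom rows, assuming a logical commit counter and insert-only post_comment rows (all names are hypothetical): each row remembers when it was committed, and a transaction only sees rows committed at or before its snapshot. As with real MVCC engines, this only makes repeated reads consistent; it does not stop a concurrent transaction from inserting into the range.

```java
import java.util.ArrayList;
import java.util.List;

public class SnapshotReadSketch {

    // Hypothetical post_comment row, stamped with the commit "time" of its inserting transaction
    record Comment(long postId, String review, long committedAt) {}

    private final List<Comment> rows = new ArrayList<>();
    private long clock = 0; // logical commit counter

    // Committing an insert makes the row visible to snapshots taken from now on
    void commitInsert(long postId, String review) {
        rows.add(new Comment(postId, review, ++clock));
    }

    // A snapshot is just "the commit counter when the transaction started"
    long beginSnapshot() {
        return clock;
    }

    // Only rows committed at or before the snapshot are visible
    List<String> commentsOf(long postId, long snapshot) {
        List<String> result = new ArrayList<>();
        for (Comment c : rows) {
            if (c.postId() == postId && c.committedAt() <= snapshot) {
                result.add(c.review());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        SnapshotReadSketch db = new SnapshotReadSketch();
        db.commitInsert(1, "Good");
        long bobSnapshot = db.beginSnapshot();                    // Bob's transaction starts
        System.out.println(db.commentsOf(1, bobSnapshot));        // [Good]
        db.commitInsert(1, "Excellent");                          // Alice inserts and commits
        System.out.println(db.commentsOf(1, bobSnapshot));        // [Good]: no phantom for Bob
        System.out.println(db.commentsOf(1, db.beginSnapshot())); // [Good, Excellent]
    }
}
```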

However, a concurrent transaction can still modify the range of records that was read previously. Even if the MVCC database engine introspects the transaction schedule, the outcome is not always the same as a 2PL-based implementation. One such example is when the second transaction issues an insert without reading the same range of records as the first transaction. In this particular use case, some MVCC database engines will not end up rolling back the first transaction.

Conclusion

This phenomenon is typical for the Read Uncommitted, Read Committed and Repeatable Read isolation levels. The default isolation level, either Read Committed (Oracle, SQL Server or PostgreSQL) or Repeatable Read (MySQL), is not required by the SQL standard to prevent this anomaly.

Nevertheless, preventing this anomaly is fairly simple. All you need to do is use a higher isolation level like Serializable. Or, if the underlying RDBMS supports predicate locks, you can simply lock the range of records using a shared (read) or an exclusive (write) range lock.

4.

By default, the @Transactional annotation uses whatever the database's default isolation level is (Isolation.DEFAULT):

| DATABASE | DEFAULT ISOLATION LEVEL |
|---|---|
| Oracle | READ_COMMITTED |
| MySQL | REPEATABLE_READ |
| Microsoft SQL Server | READ_COMMITTED |
| PostgreSQL | READ_COMMITTED |
| DB2 | CURSOR STABILITY |

--------------------------------------------------------------------------------------------------------------------------------

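There are 4 types of references in Java: strong, weak, soft and phantom.

Strong reference

String a = new String(); // strong reference: never garbage-collected while it is reachable

Weak reference

L1 cache (EntityManager cache) is often described as using weak references. Note, however, that Hibernate's persistence context keeps strong references to managed entities; they become eligible for garbage collection only after the EntityManager is cleared or closed.

WeakReference<String> weakReference = new WeakReference<>(new String("Hello"));
System.out.println("Before gc: " + weakReference.get()); // Hello
System.gc(); // only a hint to the JVM
// Usually null: weakly reachable objects are cleared at the next collection
System.out.println("After gc: " + weakReference.get());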

package com.javatechie.flightserviceexample;

public class Person {

    private String name;

    public Person(String name) {
        this.name = name;
    }

    public String getName() {
        return name;
    }

    // finalize() is deprecated since Java 9; it is used here only to print a message when the GC reclaims the object
    @Override
    protected void finalize() throws Throwable {
        System.out.println("Person is destroyed");
    }
}

package com.javatechie.flightserviceexample;

import java.lang.ref.WeakReference;

public class Main {

    public static void main(String[] args) {
        WeakReference<Person> weakReference = new WeakReference<>(new Person("Parvin"));
        System.out.println("Before gc: " + weakReference.get());
        System.gc(); // a hint only: a collection is likely but not guaranteed
        // Usually prints null; "Person is destroyed" may appear once finalization runs
        System.out.println("After gc: " + weakReference.get());
    }
}

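Soft reference:

L2 cache (EntityManagerFactory cache) is often compared to soft references: its entries outlive a single EntityManager and can be evicted when memory runs low. The actual eviction behavior depends on the configured cache provider.

package com.javatechie.flightserviceexample;

import java.lang.ref.SoftReference;

public class Main {

    public static void main(String[] args) {
        SoftReference<Person> softReference = new SoftReference<>(new Person("Parvin"));
        System.out.println("Before gc: " + softReference.get());
        System.gc();
        // Soft references are cleared only under memory pressure, so this normally still prints the Person
        System.out.println("After gc: " + softReference.get());
    }
}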

Phantom reference:
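A phantom reference never returns its referent (get() always returns null); it is only used to learn, through a ReferenceQueue, that the object has been collected, so that cleanup can run afterwards (java.lang.ref.Cleaner is built on this mechanism). A minimal sketch; whether the reference is enqueued within the timeout depends on the GC actually running.

```java
import java.lang.ref.PhantomReference;
import java.lang.ref.Reference;
import java.lang.ref.ReferenceQueue;

public class PhantomReferenceExample {

    public static void main(String[] args) throws InterruptedException {
        ReferenceQueue<Object> queue = new ReferenceQueue<>();
        Object resource = new Object();
        PhantomReference<Object> phantom = new PhantomReference<>(resource, queue);

        // Always null, even while the object is still strongly reachable
        System.out.println("get(): " + phantom.get());

        resource = null; // drop the only strong reference
        System.gc();     // only a hint: collection is not guaranteed

        // Wait up to 1 second for the GC to enqueue the phantom reference
        Reference<?> enqueued = queue.remove(1000);
        if (enqueued == phantom) {
            System.out.println("Object was collected; release the related resources here");
        } else {
            System.out.println("Not enqueued yet (the GC did not run or has not finished)");
        }
    }
}
```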

--------------------------------------------------------------------------------------------------------------------------------

Redis:

gradle dependencies:

// redis

implementation 'org.springframework.boot:spring-boot-starter-data-redis'

implementation 'org.springframework.boot:spring-boot-starter-cache'

implementation 'redis.clients:jedis'

application-cache.yaml

spring:
  cache:
    type: redis
    redis:
      time-to-live: 60000
redis:
  host: ${HOST:localhost}
  port: ${PORT:6379}
  password: ${PASSWORD:}

docker-compose.yaml

version: '3.8'
services:
  db:
    image: mysql
    environment:
      - MYSQL_DATABASE=mysql
      - MYSQL_ROOT_PASSWORD=password
    ports:
      - '3306:3306'
    volumes:
      - db:/var/lib/mysql
  redis:
    image: redis
    hostname: redis
    container_name: redis
    ports:
      - '6379:6379'
    volumes:
      # separate volume; the official redis image keeps its data in /data
      - redis-data:/data
volumes:
  db:
  redis-data:

package com.javatechie.flightserviceexample.config;

import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.boot.context.properties.EnableConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.cache.RedisCacheConfiguration;
import org.springframework.data.redis.connection.RedisStandaloneConfiguration;
import org.springframework.data.redis.connection.jedis.JedisConnectionFactory;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.GenericJackson2JsonRedisSerializer;
import org.springframework.data.redis.serializer.StringRedisSerializer;

import java.time.Duration;

@Data
@Configuration
@ConfigurationProperties("redis") // binds redis.host, redis.port and redis.password from the YAML
@EnableConfigurationProperties(RedisConfiguration.class)
public class RedisConfiguration {

    private static final long EXPIRE_TIME = 600; // cache entry TTL in seconds (10 minutes)

    private String host;
    private Integer port;
    private String password;

    @Bean
    public JedisConnectionFactory jedisConnectionFactory() {
        RedisStandaloneConfiguration configuration = new RedisStandaloneConfiguration();
        configuration.setHostName(host);
        configuration.setPort(port);
        configuration.setPassword(password); // an empty password is treated as "no password"
        return new JedisConnectionFactory(configuration);
    }

    @Bean
    public RedisTemplate<String, Object> redisTemplate() {
        final RedisTemplate<String, Object> template = new RedisTemplate<>();
        template.setConnectionFactory(jedisConnectionFactory());
        StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();
        template.setKeySerializer(stringRedisSerializer);                      // keys as plain strings
        template.setValueSerializer(new GenericJackson2JsonRedisSerializer()); // values as JSON
        template.setHashKeySerializer(stringRedisSerializer);
        template.afterPropertiesSet();
        return template;
    }

    @Bean
    public RedisCacheConfiguration cacheConfiguration() {
        // When this bean exists, Spring Boot uses it for @Cacheable caches instead of the
        // spring.cache.redis.* properties, so this TTL takes precedence over time-to-live in the YAML.
        // Keys are prefixed with the cache name by default (usePrefix() only reports that flag).
        // Values use JDK serialization here, so cached types must implement Serializable.
        return RedisCacheConfiguration.defaultCacheConfig()
                .entryTtl(Duration.ofSeconds(EXPIRE_TIME));
    }
}
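To see what the 600-second entryTtl means for @Cacheable calls, here is a plain-Java analogy of a cache-aside lookup with a TTL. It is only an illustration (Redis expires keys on the server, and Spring's cache abstraction is not implemented this way); the class, the injectable clock and the loadFlight loader are hypothetical.

```java
import java.time.Duration;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.LongSupplier;

public class TtlCacheSketch<K, V> {

    // A cached value plus the moment it stops being valid
    private record Entry<T>(T value, long expiresAtMillis) {}

    private final Map<K, Entry<V>> entries = new ConcurrentHashMap<>();
    private final Duration ttl;
    private final LongSupplier clock; // e.g. System::currentTimeMillis; injectable for testing

    TtlCacheSketch(Duration ttl, LongSupplier clock) {
        this.ttl = ttl;
        this.clock = clock;
    }

    // Cache-aside: serve a fresh entry, otherwise call the loader (the "database") and cache the result
    V get(K key, Function<K, V> loader) {
        long now = clock.getAsLong();
        Entry<V> entry = entries.get(key);
        if (entry != null && now < entry.expiresAtMillis()) {
            return entry.value(); // hit
        }
        V value = loader.apply(key); // miss or expired
        entries.put(key, new Entry<>(value, now + ttl.toMillis()));
        return value;
    }

    public static void main(String[] args) {
        long[] now = {0};
        TtlCacheSketch<Long, String> cache =
                new TtlCacheSketch<>(Duration.ofSeconds(600), () -> now[0]); // same TTL as EXPIRE_TIME
        Function<Long, String> loadFlight = id -> {
            System.out.println("Loading flight " + id + " from the database");
            return "flight-" + id;
        };
        cache.get(1L, loadFlight);                    // miss: loads
        cache.get(1L, loadFlight);                    // hit: no load
        now[0] += Duration.ofSeconds(601).toMillis(); // let the entry expire
        cache.get(1L, loadFlight);                    // expired: loads again
    }
}
```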

To open Redis from the terminal:

1) docker exec -it {container id} /bin/sh

2) redis-cli

3) keys *   (lists all keys; KEYS scans the whole keyspace, so avoid it on large production instances)

--------------------------------------------------------------------------------------------------------------------------------

Data loss (lost update):

@SneakyThrows
// @Transactional
public void transferWithAnnotation(Double amount) {
    log.info("Thread id is : {} ", Thread.currentThread().getId());
    // Assumes accounts with id 1 and 2 exist
    Account source = accountRepository.findById(1L).orElseThrow();
    if (source.getBalance() < amount) {
        throw new RuntimeException("Insufficient amount");
    }
    log.info("Thread id retrieved account 1 is : {}, {}", Thread.currentThread().getId(), source.getBalance());

    // While thread 1 sleeps, thread 2 (started 5 s later) reads the SAME old balance of account 1
    Thread.sleep(10000);

    // Account 2 is read after the other thread has already saved it, so both credits are applied
    Account target = accountRepository.findById(2L).orElseThrow();
    log.info("Thread id retrieved account 2 is : {}, {}", Thread.currentThread().getId(), target.getBalance());

    // Read-modify-write with no lock and no @Version check: both threads compute
    // "old balance - amount" for account 1, so the later save overwrites the earlier one
    // and one debit is lost (while account 2 still receives both credits)
    source.setBalance(source.getBalance() - amount);
    target.setBalance(target.getBalance() + amount);
    log.info("Thread completed: {}", Thread.currentThread().getId());
    accountRepository.save(source);
    accountRepository.save(target);
    // throw new RuntimeException();
}

@Override
// @Transactional
public void run(String... args) throws Exception {
    System.out.println("Start--------------------------------------------------------------");
    // Both threads perform the same transfer of 100 from account 1 to account 2
    Runnable runnable = () -> transferService.transferWithAnnotation(100D);

    Thread thread1 = new Thread(runnable);
    thread1.start();
    Thread.sleep(5000); // thread 2 starts while thread 1 is still sleeping
    Thread thread2 = new Thread(runnable);
    thread2.start();

    System.out.println("Finish-------------------------------------------------------------");
}
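The same lost update can be reproduced without Spring or a database. In this sketch (all names are hypothetical), a CyclicBarrier forces both threads to read the balance before either writes. The blind write loses one debit; the version-checked write, which mirrors the "UPDATE ... WHERE id = ? AND version = ?" statement Hibernate issues for an entity with a @Version field, detects the stale read and retries. Note that adding @Transactional alone does not necessarily prevent this anomaly at the common default isolation levels; optimistic (@Version) or pessimistic locking does.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;

public class LostUpdateDemo {

    // Hypothetical account row with a balance and a version column
    static final class Account {
        record Snapshot(double balance, long version) {}

        private double balance = 1000;
        private long version = 0;

        synchronized Snapshot read() {
            return new Snapshot(balance, version);
        }

        // Like "UPDATE account SET balance = ? WHERE id = ?": blindly overwrites
        synchronized void write(double newBalance) {
            balance = newBalance;
            version++;
        }

        // Like "UPDATE account SET balance = ?, version = version + 1 WHERE id = ? AND version = ?"
        synchronized boolean writeIfVersion(double newBalance, long expectedVersion) {
            if (version != expectedVersion) {
                return false; // someone committed in between: reject the stale write
            }
            balance = newBalance;
            version++;
            return true;
        }
    }

    // Two threads each debit 100; the barrier forces both reads to happen before either write
    static double runTwoDebits(boolean optimistic) throws InterruptedException {
        Account account = new Account();
        CyclicBarrier bothHaveRead = new CyclicBarrier(2);
        Runnable debit100 = () -> {
            try {
                Account.Snapshot snapshot = account.read();
                bothHaveRead.await();
                if (!optimistic) {
                    account.write(snapshot.balance() - 100); // one of the two debits is lost
                } else {
                    while (!account.writeIfVersion(snapshot.balance() - 100, snapshot.version())) {
                        snapshot = account.read(); // stale: re-read and retry (JPA would throw instead)
                    }
                }
            } catch (InterruptedException | BrokenBarrierException e) {
                throw new IllegalStateException(e);
            }
        };
        Thread t1 = new Thread(debit100);
        Thread t2 = new Thread(debit100);
        t1.start();
        t2.start();
        t1.join();
        t2.join();
        return account.read().balance();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Without version check: " + runTwoDebits(false)); // 900.0 (lost update)
        System.out.println("With version check:    " + runTwoDebits(true));  // 800.0
    }
}
```

With JPA, the retry loop is replaced by an OptimisticLockException that the caller decides how to handle.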

----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

In the context of database transactions and concurrency control, there are several phenomena related to data consistency and isolation. The main ones, often referred to as "isolation anomalies," include:

Lost Update: Two transactions read the same row and then both write it back. The second write overwrites the first, so one of the updates is silently lost and only the other is reflected in the final data.

Non-Repeatable Read: A transaction reads the same row twice and gets different values, because another transaction updated (and committed) that row between the two reads.

Phantom Read: A transaction runs the same query with the same condition twice and gets a different set of rows, because another transaction inserted or deleted rows matching that condition in between. The rows that appear or disappear are the "phantoms".

Dirty Read: A transaction reads data that another transaction has modified but not yet committed. If the modifying transaction then rolls back, the reader has used data that never officially existed.

These phenomena are used to describe what each transaction isolation level guarantees. The SQL standard defines its four levels (READ UNCOMMITTED, READ COMMITTED, REPEATABLE READ, SERIALIZABLE) by which of the read phenomena they still allow. Higher isolation levels give stronger consistency guarantees, usually at the cost of performance or concurrency.
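
The guarantees of each level can be written down as a small lookup. The sketch below encodes the minimum the SQL standard requires of its four isolation levels. These are the same names Spring's Isolation enum uses, as in @Transactional(isolation = Isolation.REPEATABLE_READ). Lost update is not one of the standard's named phenomena, so it is left out. Phenomenon, IsolationLevel and weakestPreventing are hypothetical helpers. Real databases may prevent more than the standard requires: PostgreSQL's REPEATABLE READ, for instance, also prevents phantom reads.

```java
import java.util.EnumSet;
import java.util.Set;

// The three read phenomena named by the SQL standard.
enum Phenomenon { DIRTY_READ, NON_REPEATABLE_READ, PHANTOM_READ }

// Which phenomena each SQL-standard level still permits (the standard's lower bound).
enum IsolationLevel {
    READ_UNCOMMITTED(EnumSet.allOf(Phenomenon.class)),
    READ_COMMITTED(EnumSet.of(Phenomenon.NON_REPEATABLE_READ, Phenomenon.PHANTOM_READ)),
    REPEATABLE_READ(EnumSet.of(Phenomenon.PHANTOM_READ)),
    SERIALIZABLE(EnumSet.noneOf(Phenomenon.class));

    private final Set<Phenomenon> permitted;

    IsolationLevel(Set<Phenomenon> permitted) {
        this.permitted = permitted;
    }

    boolean allows(Phenomenon p) {
        return permitted.contains(p);
    }

    // Weakest level that rules out every phenomenon in mustPrevent.
    static IsolationLevel weakestPreventing(Set<Phenomenon> mustPrevent) {
        for (IsolationLevel level : values()) { // declared from weakest to strongest
            boolean preventsAll = true;
            for (Phenomenon p : mustPrevent) {
                if (level.allows(p)) {
                    preventsAll = false;
                    break;
                }
            }
            if (preventsAll) {
                return level;
            }
        }
        return SERIALIZABLE;
    }
}
```

For example, weakestPreventing(EnumSet.of(Phenomenon.NON_REPEATABLE_READ)) returns REPEATABLE_READ, and ruling out phantom reads under the standard's rules requires SERIALIZABLE.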

--------------------------------------------------------------------------------------------------------------------------------

package az.turbo.jprofiler.service.impl;

import az.turbo.jprofiler.entity.Person;
import az.turbo.jprofiler.repository.PersonRepository;
import az.turbo.jprofiler.service.PersonService;
import lombok.RequiredArgsConstructor;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;

@Service
@RequiredArgsConstructor
public class PersonServiceImpl implements PersonService {

    private final PersonRepository personRepository;

    @Override
    @Transactional
    public void save() {
        try {
            for (int i = 0; i < 10; i++) {
                Person person = new Person();
                person.setName("test : " + i);
                personRepository.save(person);
                if (i == 7) throw new RuntimeException("Exception");
            }
        } catch (RuntimeException e) {
            System.out.println(e.getMessage());
            e.printStackTrace();
        }
    }
}

or, catching Exception instead of RuntimeException:

package az.turbo.jprofiler.service.impl;

import az.turbo.jprofiler.entity.Person;
import az.turbo.jprofiler.repository.PersonRepository;
import az.turbo.jprofiler.service.PersonService;
import lombok.RequiredArgsConstructor;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;

@Service
@RequiredArgsConstructor
public class PersonServiceImpl implements PersonService {

    private final PersonRepository personRepository;

    @Override
    @Transactional
    public void save() {
        try {
            for (int i = 0; i < 10; i++) {
                Person person = new Person();
                person.setName("test : " + i);
                personRepository.save(person);
                if (i == 7) throw new RuntimeException("Exception");
            }
        } catch (Exception e) {
            System.out.println(e.getMessage());
            e.printStackTrace();
        }
    }
}

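In both versions the transaction is not rolled back, because the method catches the exception itself. Since the exception never leaves the method, the Spring AOP proxy that manages the transaction never sees it. The method returns normally, the transaction commits, and the eight rows saved before the exception (test : 0 to test : 7) stay in the database.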
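
Spring applies @Transactional through a proxy around the bean (a JDK dynamic proxy or a CGLIB subclass). The proxy can decide between commit and rollback only from how the method exits. The following is a minimal sketch of that idea built on java.lang.reflect.Proxy. It uses a hypothetical Saver interface in place of PersonService. FakeTxProxy is not Spring's implementation and only mimics the default decision: commit on a normal return, roll back on a RuntimeException or Error, commit on a checked exception.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

// Hypothetical service interface standing in for PersonService.
interface Saver {
    void save() throws Exception;
}

// Toy transactional proxy built on a JDK dynamic proxy (not Spring's code).
final class FakeTxProxy {

    // What the proxy decided for each call: "commit" or "rollback".
    static final List<String> decisions = new ArrayList<>();

    static Saver wrap(Saver target) {
        return (Saver) Proxy.newProxyInstance(
                Saver.class.getClassLoader(),
                new Class<?>[] {Saver.class},
                (proxy, method, args) -> {
                    // "begin transaction" would happen here
                    try {
                        Object result = method.invoke(target, args);
                        decisions.add("commit"); // normal return: commit, whatever was caught inside
                        return result;
                    } catch (InvocationTargetException e) {
                        Throwable cause = e.getCause();
                        // Default rule: roll back on unchecked exceptions and errors only
                        boolean rollback = cause instanceof RuntimeException || cause instanceof Error;
                        decisions.add(rollback ? "rollback" : "commit");
                        throw cause; // the caller still sees the original exception
                    }
                });
    }

    // Calls body through the proxy and returns the proxy's decision.
    static String outcome(Saver body) {
        decisions.clear();
        try {
            wrap(body).save();
        } catch (Exception ignored) {
            // the decision has already been recorded
        }
        return decisions.get(decisions.size() - 1);
    }

    public static void main(String[] args) {
        // Like the PersonServiceImpl examples: the exception is caught inside the method
        Saver swallowing = () -> {
            try {
                throw new RuntimeException("Exception");
            } catch (RuntimeException e) {
                System.out.println(e.getMessage());
            }
        };
        System.out.println(outcome(swallowing)); // commit
        System.out.println(outcome(() -> { throw new RuntimeException("Exception"); })); // rollback
    }
}
```

If the exception really has to be handled inside the method, either rethrow it or mark the transaction explicitly with TransactionAspectSupport.currentTransactionStatus().setRollbackOnly().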

*** The default propagation level is REQUIRED:

package az.turbo.jprofiler.service.impl;

import az.turbo.jprofiler.entity.Person;
import az.turbo.jprofiler.repository.PersonRepository;
import az.turbo.jprofiler.service.PersonService;
import lombok.RequiredArgsConstructor;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Propagation;
import org.springframework.transaction.annotation.Transactional;

@Service
@RequiredArgsConstructor
public class PersonServiceImpl implements PersonService {

    private final PersonRepository personRepository;

    @Override
    // REQUIRED is the default, so this is equivalent to a plain @Transactional
    @Transactional(propagation = Propagation.REQUIRED)
    public void save() throws Exception {
        for (int i = 0; i < 10; i++) {
            Person person = new Person();
            person.setName("test : " + i);
            personRepository.save(person);
            // Exception is a checked exception: by default Spring rolls back only on
            // RuntimeException and Error, so without rollbackFor = Exception.class
            // the rows saved so far are committed before the exception is rethrown
            if (i == 7) throw new Exception("Exception");
        }
    }
}
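
The example above throws a checked Exception. Under Spring's default rollback rules, only RuntimeException and Error trigger a rollback. So when save() runs in its own transaction, it commits the rows it has saved and then rethrows. @Transactional(rollbackFor = Exception.class) changes that, and noRollbackFor works in the opposite direction. The following is a simplified sketch of the decision. It assumes exact class matching up the exception's class hierarchy, with the closest matching rule winning. RollbackRules is a hypothetical helper, not Spring's RuleBasedTransactionAttribute.

```java
import java.util.List;

// Simplified model of the rollback decision for one @Transactional method (hypothetical helper).
final class RollbackRules {

    private final List<Class<? extends Throwable>> rollbackFor;
    private final List<Class<? extends Throwable>> noRollbackFor;

    RollbackRules(List<Class<? extends Throwable>> rollbackFor,
                  List<Class<? extends Throwable>> noRollbackFor) {
        this.rollbackFor = rollbackFor;
        this.noRollbackFor = noRollbackFor;
    }

    boolean rollbackOn(Throwable ex) {
        // Walk from the thrown type up towards Throwable; the first (closest) matching rule decides
        for (Class<?> c = ex.getClass(); c != null && c != Object.class; c = c.getSuperclass()) {
            if (noRollbackFor.contains(c)) {
                return false;
            }
            if (rollbackFor.contains(c)) {
                return true;
            }
        }
        // No rule matched: the default, unchecked exceptions and errors only
        return ex instanceof RuntimeException || ex instanceof Error;
    }
}
```

With no rules, new RollbackRules(List.of(), List.of()).rollbackOn(new Exception()) is false, which is the situation in the example above. Listing Exception.class in rollbackFor makes it true.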

*** Propagation level = REQUIRES_NEW

package az.turbo.jprofiler.service.impl;

import az.turbo.jprofiler.service.PersonService;
import lombok.RequiredArgsConstructor;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;
import org.springframework.transaction.support.TransactionSynchronizationManager;

@Service
@RequiredArgsConstructor
public class TestService {

    private final PersonService personService;

    @Transactional
    public void foo() throws Exception {
        // Name of the transaction opened for foo() (by default derived from the class and method name)
        String transactionName = TransactionSynchronizationManager.getCurrentTransactionName();
        System.out.println("TestService: " + transactionName);
        // Called through the Spring proxy, so save()'s own propagation setting applies
        personService.save();
    }
}

package az.turbo.jprofiler.service.impl;

import az.turbo.jprofiler.entity.Person;
import az.turbo.jprofiler.repository.PersonRepository;
import az.turbo.jprofiler.service.PersonService;
import lombok.RequiredArgsConstructor;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Propagation;
import org.springframework.transaction.annotation.Transactional;
import org.springframework.transaction.support.TransactionSynchronizationManager;

@Service
@RequiredArgsConstructor
public class PersonServiceImpl implements PersonService {

    private final PersonRepository personRepository;

    @Override
    @Transactional(propagation = Propagation.REQUIRES_NEW)
    public void save() throws Exception {
        // REQUIRES_NEW suspends the caller's transaction and starts a new one,
        // so this prints a different transaction name than TestService does
        String transactionName = TransactionSynchronizationManager.getCurrentTransactionName();
        System.out.println("PersonServiceImpl: " + transactionName);
        for (int i = 1; i < 10; i++) {
            Person person = new Person();
            person.setName("test : " + i);
            personRepository.save(person);
            // Rolls back this inner transaction (rows test : 1 to test : 7); the exception
            // then propagates to TestService.foo(), whose transaction is rolled back as well
            if (i == 7) throw new RuntimeException("Exception");
        }
    }

    // Same loop with the default propagation (REQUIRED)
    @Transactional
    public void foo() {
        for (int i = 1; i < 10; i++) {
            Person person = new Person();
            person.setName("test : " + i);
            personRepository.save(person);
            if (i == 7) throw new RuntimeException("Exception");
        }
    }
}
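
The difference between the two propagation levels can be modelled without Spring. TestService.foo opens a transaction. With REQUIRES_NEW, PersonServiceImpl.save suspends it and runs in a transaction of its own, so the two println calls print different names. With REQUIRED, save would join foo's transaction and print the same name. The sketch below is a toy model under that reading. ToyTxManager, its Tx class and the rollback-only handling are hypothetical simplifications, not Spring's AbstractPlatformTransactionManager.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Toy model of REQUIRED vs REQUIRES_NEW (hypothetical; not Spring's implementation).
final class ToyTxManager {

    enum Propagation { REQUIRED, REQUIRES_NEW }

    // One physical transaction: its name, uncommitted rows and a rollback-only flag.
    static final class Tx {
        final String name;
        final List<String> pending = new ArrayList<>();
        boolean rollbackOnly;

        Tx(String name) {
            this.name = name;
        }
    }

    private final Deque<Tx> active = new ArrayDeque<>(); // top of the stack = current transaction
    final List<String> committedRows = new ArrayList<>();

    String currentName() {
        return active.isEmpty() ? null : active.peek().name;
    }

    void insert(String row) {
        active.peek().pending.add(row);
    }

    void execute(String name, Propagation propagation, Runnable body) {
        if (propagation == Propagation.REQUIRED && !active.isEmpty()) {
            Tx joined = active.peek(); // REQUIRED inside an existing transaction: join it
            try {
                body.run();
            } catch (RuntimeException e) {
                joined.rollbackOnly = true; // a participant failed, so the shared transaction is doomed
                throw e;
            }
            return;
        }
        Tx tx = new Tx(name); // no transaction yet, or REQUIRES_NEW: start a new one
        active.push(tx);      // an outer transaction, if any, is suspended underneath
        try {
            body.run();
        } finally {
            active.pop();     // resume the outer transaction; on an exception tx.pending is discarded
        }
        if (tx.rollbackOnly) {
            // Spring signals this case with UnexpectedRollbackException
            throw new IllegalStateException(name + " was marked rollback-only and rolled back");
        }
        committedRows.addAll(tx.pending); // commit
    }

    public static void main(String[] args) {
        ToyTxManager tm = new ToyTxManager();
        tm.execute("TestService.foo", Propagation.REQUIRED, () -> {
            System.out.println("outer: " + tm.currentName());
            tm.insert("outer row");
            try {
                tm.execute("PersonServiceImpl.save", Propagation.REQUIRES_NEW, () -> {
                    System.out.println("inner: " + tm.currentName());
                    for (int i = 1; i < 10; i++) {
                        tm.insert("test : " + i);
                        if (i == 7) throw new RuntimeException("Exception");
                    }
                });
            } catch (RuntimeException e) {
                // the outer method handles the failure and carries on
            }
        });
        System.out.println(tm.committedRows); // [outer row]: only the inner transaction rolled back
    }
}
```

In this sketch, the outer method catches the inner failure to make the difference visible. With REQUIRES_NEW, only the inner rows are discarded. With REQUIRED, the joined transaction is marked rollback-only and the outer commit fails, which Spring reports as UnexpectedRollbackException. In TestService.foo above, the exception is not caught, so foo's transaction is rolled back as well.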
